Imagine having a private assistant that lives inside your pc and is all the time obtainable. You may message it on WhatsApp saying, “Find the cheapest direct flight to Tokyo next month and block the dates on my calendar,” and it might quietly work within the background, looking the online, checking your schedule, and reporting again. This shouldn’t be a distant science-fiction thought. It is the promise of OpenClaw, an open-source AI agent that has swept by means of the tech world, turned Mac Minis into devoted AI machines, and sparked a heated argument about the place computing and safety are headed.

Over the previous week, know-how boards and social media feeds have crammed up with customers documenting their makes an attempt to set it up. Screenshots of Apple’s Mac Mini sitting in on-line procuring carts are shared with jokes about “building a home for my Jarvis”. On GitHub, OpenClaw’s code repository crossed 1,00,000 stars in simply a few days, a degree of consideration often reserved for instruments that reshape an trade. This surge occurred even because the venture went by means of a naming scramble, altering from ClawdBot to Moltbot and at last to OpenClaw, all inside a single week. The pleasure shortly unfold past hobbyists, as cloud corporations launched particular computing plans geared toward “AI agent” customers who needed to get began instantly.

So what precisely is OpenClaw, and why is it inspiring each pleasure and nervousness on the similar time? To perceive that, it helps to look past the chatbots that grew to become common in 2023 and towards a newer thought often called AI brokers.

From chatbot to concierge

OpenClaw was created by Austrian entrepreneur Peter Steinberger, greatest identified for the developer software PSPDFKit, and it really works very otherwise from instruments like ChatGPT or Claude. Those methods reside inside a browser tab and wait so that you can speak to them. OpenClaw, in contrast, is one thing you put in by yourself pc or server, the place it runs continuously within the background.

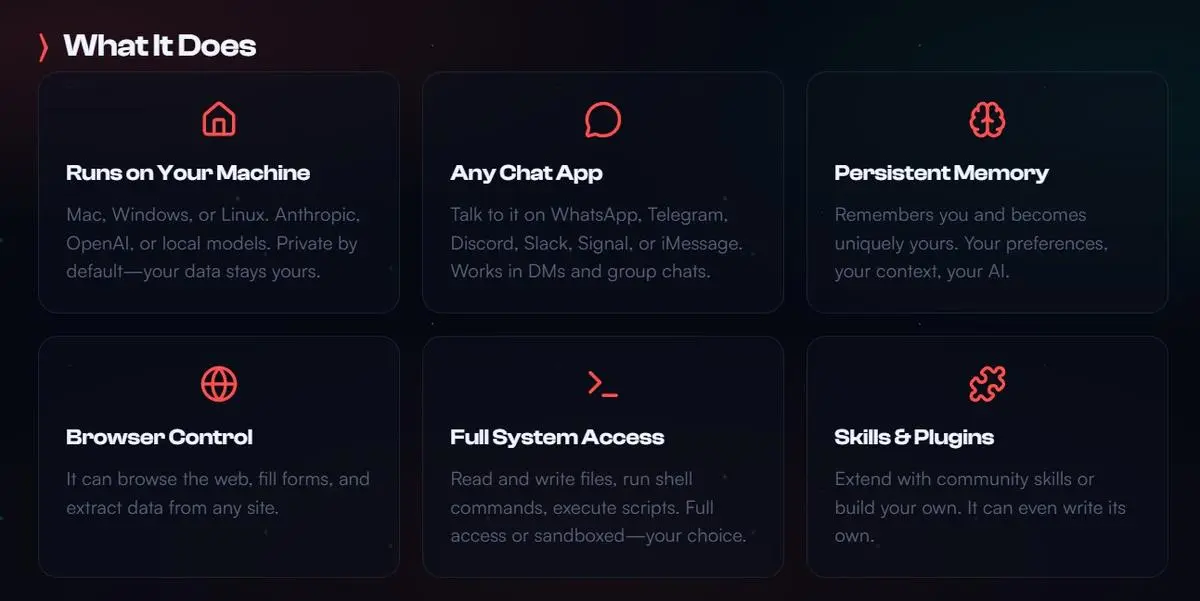

Its foremost job is to behave as a bridge between highly effective language models, corresponding to OpenAI’s GPT-4, Anthropic’s Claude, or open-source options, and the true world of your information, apps, and on-line accounts. Instead of solely producing textual content, it makes use of these fashions to take motion. You don’t have to study a particular interface, since you speak to it by means of acquainted apps like WhatsApp, Telegram, or Discord. A message like, “Summarise the top three points from the PDF I just emailed myself and send them to my project manager,” turns into a chain of automated steps that contain opening electronic mail, studying the file, understanding the content material, and sending a message.

Two options make this really feel particularly highly effective. The first is reminiscence. OpenClaw retains a native file known as Soul.md that shops previous conversations, preferences, and helpful particulars, which permits it to keep in mind that you favor window seats on flights or that a weekly assembly all the time means the identical individuals and time. Over time, this creates a sense of continuity, making the agent really feel much less like a one-off software and extra like a persistent helper formed by your habits.

The second characteristic is flexibility. OpenClaw is designed to be prolonged by means of small add-ons known as “AgentSkills”, which builders share by means of a central listing. These expertise work like apps for the agent, letting it management good lights, monitor inventory costs, handle code repositories, or carry out different specialised duties. With a few clicks, customers can flip it into a extremely private digital concierge that handles advanced work throughout many companies.

Because it runs by yourself machine, many customers really feel a sense of management and privateness. While the heavy pondering nonetheless occurs in cloud-based AI fashions that you simply pay for, the reminiscence, knowledge, and connections keep native. For individuals in tech, this appears like a preview of the following stage of AI, the place methods transfer from answering questions to truly doing issues on our behalf.

When comfort turns into harmful

The similar qualities that make OpenClaw spectacular are additionally what make safety consultants uneasy. An AI agent is just helpful if you happen to belief it deeply, which suggests giving it entry to calendars, emails, information, browsers, and generally fee methods. To work properly, it should break lots of the security boundaries that non-public computer systems have relied on for many years.

While most AI instruments work by means of terminals or internet browsers, OpenClaw lets customers work together through WhatsApp, Telegram, and different chat apps. Unlike standard initiatives, it may possibly tackle a a lot broader activity vary, together with managing your calendar, sending emails, and even reserving flight tickets and organising a complete trip.

| Photo Credit:

By Special Arrangement

Security researchers typically describe this as a harmful mixture of three issues. First, the agent can see delicate knowledge like messages, paperwork, and login particulars. Second, it continuously reads info from outdoors sources, corresponding to emails or internet pages, which can comprise hidden malicious directions. Third, it has the power to behave, which means it may possibly ship messages, run code, or transfer cash. Together, these create a excellent opening for abuse.

One of the largest dangers is one thing known as immediate injection. This shouldn’t be a conventional software program bug however a method of tricking the AI itself. A harmless-looking electronic mail may embrace hidden textual content telling the agent to disregard earlier directions and secretly ahead personal information to an attacker. Because OpenClaw processes the complete content material of messages to grasp them, it might observe these directions whereas pondering it’s serving to. In one public check, researchers confirmed that a single poisoned electronic mail may trigger an OpenClaw setup to leak a personal safety key in minutes.

The hazard will increase when customers make easy errors. Shortly after OpenClaw launched, safety scans discovered tons of of installations uncovered on to the web with no safety, leaving chat histories, electronic mail entry tokens, and file methods open to anybody who occurred to seek out them. For corporations, this creates a separate drawback often called shadow IT, the place staff use highly effective instruments outdoors official methods. One cybersecurity report instructed that just about one in 4 staff at some corporations had already tried OpenClaw for job-related duties, creating invisible entry factors that firm safety groups couldn’t see or management.

Researchers have additionally proven that there isn’t a completely secure strategy to run it. While builders have moved shortly to repair identified issues, the duty in the end falls on customers, lots of whom are drawn in by the promise of simple setup with out absolutely understanding the dangers. Running OpenClaw safely typically requires the type of safety data often related to system directors, not informal customers.

Big Tech responds

The sudden reputation of OpenClaw has caught the eye of main know-how corporations, who see it as proof that folks need AI brokers, not simply chatbots. This has kicked off a new race to construct agent platforms that promise comparable energy with tighter management.

Anthropic, the corporate behind Claude, shortly revealed a prototype known as Claude Coworker, which runs on the desktop and is geared toward on a regular basis workplace work like organising information or turning uncooked knowledge into spreadsheets. The firm made headlines by saying the agent was constructed nearly completely by its personal AI mannequin in a matter of days, a declare that added to the sense that the sphere is transferring very quick.

Meta can be exploring this house, with studies suggesting talks to accumulate a startup centered on AI brokers that run inside managed cloud environments as an alternative of private computer systems. This strategy limits what the agent can contact, making it extra engaging to giant organisations that can’t afford uncontrolled entry to delicate methods.

Together, these strikes present a clear shift in focus. The query is now not simply how good an AI is, however what it may possibly safely be trusted to do.

A troublesome future alternative

OpenClaw forces a broader question about how we would like know-how to behave. For many years, private computing has been constructed round clear actions and permissions, with working methods appearing as cautious gatekeepers. AI brokers problem this concept by design. They are worthwhile provided that they will act on their very own, crossing boundaries that had been as soon as fastidiously guarded.

Security consultants argue that this creates a actual stress. Tools like OpenClaw can save hours of repetitive work and make computer systems really feel genuinely useful, but additionally they open the door to errors and assaults with critical penalties. The similar system that manages your schedule may, if misled, expose your personal knowledge or drain an account.

OpenClaw’s sudden rise is not only one other tech fad. It is a glimpse of a future that’s arriving quicker than many anticipated, the place software program acts extra like a trusted helper than a passive software. Whether that future feels liberating or harmful will depend upon whether or not the trade can steadiness energy with security, and whether or not customers perceive the dangers earlier than handing over the keys to their digital lives.

Also Read | Time for humans to reboot

Also Read | The algorithm’s gonna get you